Christian is a figure of Debian, not only because of the tremendous coordination work that he does within the translation project, but also because he s very involved at the social level. He s probably in the top 5 of the persons who attended most often the Debian conference.

Christian is a friend (thanks for hosting me so many times when I come to Paris for Debian related events) and I m glad that he accepted to be interviewed. He likes to speak and that shows in the length of his answers

but you ll be traveling the world while reading him.

My questions are in bold, the rest is by Christian.

Who are you?

I am a French citizen (which is easy to guess unless you correct my

usual mistakes in what follows). I m immensely proud of being married

for nearly 26 years with Elizabeth (who deserves a statue from Debian

for being so patient with my passion and my dedication to the

project).

I m also the proud father of 3 wonderful kids , aged 19 to

23.

I work as team manager in the Networks and Computers Division of Onera

the French Aerospace lab , a public research institute about

Aeronautics, Space and Defense. My team provides computer management

services for research divisions of Onera, with a specific focus put on

individual computing.

I entered the world of free software as one of the very first users of

Linux in France. Back in the early 1990 s, I happened (though the BBS

users communities) to be a friend of several early adopters of Linux

and/or BSD386/FreeBSD/NetBSD in France. More specifically, I

discovered Linux thanks with my friend Ren Cougnenc (all my free

software talks are dedicated to Ren , who passed away in 1996).

You re not a programmer, not even a packager. How did you come

to Debian?

I m definitely not a programmer and I never studied computing (I

graduated in Materials Science and worked in that area for a few years

after my PhD).

However, my daily work always involved computing (I redesigned the

creep testing laboratory and its acquisition system all by myself

during my thesis research work). An my hobbies often involved

playing with home computers, always trying to learn about something

new.

So, first learning about a new operating system then trying to

figure out how to become involved in its development was quite a

logical choice.

Debian is my distro of choice since it exists. I used Slackware on work

machines for a while, but my home server, kheops,

first ran Debian 1.1 when I stopped running a BBS on an MS-DOS machine

to host a news server. That was back in October 1996.

I then happened to be a user, and more specifically a user of

genealogy software, also participating very actively in Usenet from

this home computer and server, that was running this Debian thing.

So, progressively, I joined mailing lists and, being a passionate

person, I tried to figure out how I could bring my own little

contribution to all this.

This is why I became a packager (yes, I am one!) by taking over the

geneweb package, which I was using to publish my genealogy

research. I applied as DD in January 2001, then got my account in July

2001. My first upload to the Debian archive occurred on August 22nd

2001: that was of course geneweb, which I still maintain.

Quite quickly, I became involved in the work on French localization. I

have always been a strong supporter of localized software (I even

translated a few BBS software back in the early 90 s) as one of the

way to bring the power and richness of free software to more users.

Localization work lead me to work on the early version of Debian

Installer, during those 2003-2005 years where the development of D-I

was an incredibly motivating and challenging task, lead by Joey Hess

and his inspiring ideas.

From user to contributor to leader, I suddenly discovered, around

2004, that I became the coordinator of D-I i18n (internationalization)

without even noticing

You re the main translation coordinator in Debian. What plans

and goals have you set for Debian Wheezy?

As always: paint the world in red.

Indeed, this is my goal for years. I would like our favorite distro to

be able to be used by anyone in the world, whether she speaks

English, Northern Sami, Wolof, Uyghur or Secwepemcts n.

As a matter of symbol, I use the installer for this. My stance is that

one should be able to even install Debian in one s own language. So,

for about 7 years, I use D-I as a way to attract new localization

contributors.

This progress is represented on

this

page where the world

is gradually painted in red as long as the installer supports more

languages release after release. The map above tries to illustrate

this by painting in red countries when the most spoken language in the

country is supported in Debian Installer.

However, that map does not give enough reward to many great efforts

made to support very different kind of languages. Not only various

national languages, but also very different ones: all regional

languages of Spain, many of the most spoken languages in India,

minority languages such as Uyghur for which an effort is starting,

Northern Sami because it is taught in a few schools in Norway, etc.,

etc.

Still, the map gives a good idea of what I would like to see better

supported: languages from Africa, several languages in Central

Asia. And, as a very very personal goal, I m eagerly waiting for

support of Tibetan in Debian Installer, the same way we support its

sister language, Dzongkha from Bhutan.

For this to happen, we have to make contribution to localization as

easy as possible. The very distributed nature of Debian development

makes this a challenge, as material to translate (D-I components,

debconf screens, native packages, packages descriptions, website,

documentation) is very widely spread.

A goal, for years, is to set a centralized place where

translators could work easily without even knowing about SVN/GIT/BZR

or having to report bugs to send their work. The point, however, would

be to have this without making compromises on translation quality. So,

with peer review, use of thesaurus and translation memory and all such

techniques.

Tools for this exist: we, for instance, worked with the developers of

Pootle to help making it able to cope with the huge amount of material

in Debian (think about packages descriptions translations). However, as

of now, the glue between such tools and the raw material (that often

lies in packages) didn t come.

So, currently, translation work in Debian requires a great knowledge

of how things are organized, where is the material, how it can be

possible to make contribution reach packages, etc.

And, as I m technically unable to fulfill the goal of building the

infrastructure, I m fulfilling that role of spreading out the

knowledge. This is how I can define my coordinator role.

Ubuntu uses a web-based tool to make it easy to contribute

translations directly in Launchpad. At some point you asked Canonical to

make it free software. Launchpad has been freed in the mean time. Have you

(re)considered using it?

Why not? After all, it more or less fills in the needs I just

described. I still don t really figure out how we could have all

Debian material gathered in Rosetta/Launchpad .and also how Debian

packagers could easily get localized material back from the framework

without changing their development processes.

I have always tried to stay neutral wrt Ubuntu. As many people now in

Debian, I feel like we have reached a good way to achieve our mutual

development. When it comes at localization work, the early days where

the everything in Rosetta and translates who wants stanza did a lot

of harm to several upstream localization projects is, I think, way

over.

Many people who currently contribute to D-I localization were indeed

sent to me by Ubuntu contributors .and by localizing D-I, apt,

debconf, package descriptions, etc., they re doing translation work

for Ubuntu as well as for Debian.

Let s say I m a Debian user and I want to help translate Debian

in my language. I can spend 1 hour per week on this activity. What should

I do to start?

Several language teams use Debian mailing lists to coordinate their

work. If you re lucky enough to be a speaker of one of these

languages, try joining

debian-l10n-<yourlanguage>

and follow what s happening there. Don t try to immediately jump in some

translation work. First, participate to peer reviews: comment on

others translations. Learn about the team s processes, jargon and

habits.

Then, progressively, start working on a few translations: you may want

to start with translations of debconf templates: they are short, often

easy to do. That s perfect if you have few time.

If no language team exists for your language, try joining

debian-i18n

and ask about existing effort for your language. I may be able to

point you to individuals working on Debian translations (very often

along with other free software translation efforts). If I am not, then

you have just been named coordinator for your language

I may

even ask you if you want to work on translating the Debian Installer.

What s the biggest problem of Debian?

We have no problems, we only have solutions

We are maybe facing a growth problem for a few years. Despite the

increased welcoming aspects of our processes (Debian Maintainers),

Debian is having hard times in growing. The overall number of active

contributors is probably stagnating for quite a while. I m still

amazed, however, to see how we can cope with that and still be able to

release over the years. So, after all, this is maybe not a

problem

Many people would point communication problems here. I don t. I

think that communication inside the Debian project is working fairly

well now. Our famous flame wars do of course still happen from time

to time, but what large free software project doesn t have flame wars?

In many areas, we indeed improved communication very significantly. I

want to take as an example the way the release of squeeze has been

managed. I think that the release team did, even more this time, a

very significant and visible effort to communicate with the entire

project. And the release of squeeze has been a great success in that

matter.

So, there s nearly nothing that frustrates me in Debian. Even when a

random developer breaks my beloved 100% completeness of French

translations, I m not frustrated for more than 2 minutes.

You re known in the Debian community as the organizer of the

Cheese & Wine Party during DebConf. Can you tell us what this is

about?

This is an interesting story about how things build themselves in

Debian.

It all started in July 2005, before DebConf 5 in Helsinki. Denis

Barbier, Nicolas Fran ois and myself agreed to bring at Debconf a few

pieces of French cheese as well as 1 or 2 bottles of French wine and

share them with some friends. Thus, we settled an informal meeting in

the French room where we invited some fellows: from memory, Benjamin

Mako Hill, Hannah Wallach, Matt Zimmermann and Moray Allan. All of

us fond of smelly cheese, great wine plus some extra p t

home-made by Denis in Toulouse.

It finally happened that, by word of mouth, a few dozens of other

people slowly joined in that French room and turned the whole thing

into an improvized party that more or less lasted for the entire

night.

The tradition was later firmly settled in 2006, first in Debconf 6 in

Mexico where I challenged the French DDs to bring as many great cheese

as possible, then during the Debian i18n meeting in Extremadura (Sept

2006) where we reached the highest amount of cheese per participant

ever. I think that the Creofonte building in Casar de C ceres hasn t

fully recovered from it and is still smelling cheese 5 years after.

This party later became a real tradition for DebConf, growing over

and over each year. I see it as a wonderful way to illustrate the

diversity we have in Debian, as well as the mutual enrichment we

always felt during DebConfs.

My only regret about it is that it became so big over the years that

organizing it is always a challenge and I more and more feel pressure

to make it successful. However, over the years, I always found

incredible help by DebConf participants (including my own son, last

year a moment of sharing which we will both remember for years, i think).

And, really, in 2010, standing up on a chair, shouting (because the

microphone wasn t working) to thank everybody, was the most emotional

moment I had at Debconf 10.

Is there someone in Debian that you admire for their

contributions?

So many people. So, just like it happens in many awards ceremonies, I

will be very verbose to thank people, sorry in advance for this.

The name that comes first is Joey Hess. Joey is someone who has a

unique way to perceive what improvements are good for Debian and a

very precise and meticulous way to design these improvements. Think

about debconf. It is designed for so long now and still reaching its

very specific goal. So well designed that it is the entire basis for

Joey s other achievement: designing D-I. Moreover, I not only admire

Joey for his technical work, but also for his interaction with

others. He is not he loudest person around, he doesn t have to .just

giving his point in discussion and, guess what? Most of the time, he s

right.

Someone I would like to name here, also, is Colin Watson. Colin is

also someone I worked with for years (the D-I effect, again ) and,

here again, the very clever way he works on technical improvements as

well as his very friendly way to interact with others just make it.

And, how about you, Rapha l?

I m really admirative of the way you

work on promoting technical work on Debian. Your natural ability to

explain things (as good in English as it is in French) and your

motivation to share your knowledge are a great benefit for the

project. Not to mention the technical achievements you made with

Guillem on dpkg of course!

Another person I d like to name here is Steve Langasek. We both

maintain samba packages for years and collaboration with him has

always been a pleasure. Just like Colin, Steve is IMHO a model to

follow when it comes at people who work for Canonical while continuing

their involvment in Debian. And, indeed, Steve is so patient with my

mistakes and stupid questions in samba packaging that he deserves a

statue.

We re now reaching the end of the year where Stefano Zacchiroli was

the Debian Project Leader. And, no offense intended to people who were

DPL before him (all of them being people I consider to be friends of

mine), I think he did the best term ever. Zack is wonderful in sharing

his enthusiasm about Debian and has a unique way to do it. Up to the

very end of his term, he has always been working on various aspects of

the project and my only hope is that he ll run again (however, I would

very well understand that he wants to go back to his hacking

activities!). Hat off, Zack!I again have several other people to name in this Bubulle hall of

Fame : Don Armstrong, for his constant work on improving Debian BTS,

Margarita Manterola as one of the best successes of Debian Women (and

the most geeky honeymoon ever), Denis Barbier and Nicolas Fran ois

because i18n need really skilled people, Cyril Brulebois and Julien

Cristau who kept X.org packaging alive in lenny and squeeze, Otavio

Salvador who never gave up on D-I even when we were so few to care

about it.

I would like to make a special mention for Frans Pop. His loss in 2010

has been a shock for many of us, and particularly me. Frans and I had

a similar history in Debian, both mostly working on so-called non

technical duties. Frans has been the best release manager for D-I (no

offense intended, at all, to Joey or Otavio .I know that both of

them share this feeling with me). His very high involvment in his work

and the very meticulous way he was doing it lead to great achievements

in the installer. The Installation Guide work was also a model and

indeed a great example of non technical work that requires as many

skills as more classical technical work. So, and even though he was

sometimes so picky and, I have to admit, annoying, that explains why

I m still feeling sad and, in some way, guilty about Frans loss.

One of my goals for wheezy is indeed to complete some things Frans

left unachieved. I just found one in bug

#564441: I will make this

work reach the archive, benefit our users and I know that Frans would

have liked that.

Thank you to Christian for the time spent answering my questions. I hope you enjoyed reading his answers as I did.

Subscribe to my newsletter to get my monthly summary of the Debian/Ubuntu news and to not miss further interviews. You can also follow along on

Identi.ca,

Twitter and

Facebook.

7 comments Liked this article? Click here. My blog is Flattr-enabled.

I got a call from Mum today to inform me that my grandmother had passed away

last night, Brisbane time, at 94 years of age.

I got a call from Mum today to inform me that my grandmother had passed away

last night, Brisbane time, at 94 years of age.

If you want to get all genealogically correct, she's technically my

step-maternal grandmother, but she's the grandmother I spent the most time

with, growing up, so I'm not going to split hairs.

It's come as a bit of a shock, because despite having had progressively worse

dementia for probably over a decade, she's been in incredibly good physical

condition. I think it was in her 70's that she had a heart valve replaced, and

I for one had been expecting that to be the thing to wear out and take her

down slowly. I last saw her in the flesh in January, when the above photo

was taken, and more recently a few weeks ago on Skype.

She was recently moved from an aged-care hostel in Toowoomba to a nursing

home in Brisbane, as her dementia had progressed beyond the point that she

could be cared for in the previous facility.

Mum had visited her earlier in the day yesterday, and she was fine, but

apparently the staff found her unconscious at around 5pm when they went to

get her for dinner. She was taken to the Royal Brisbane and Women's Hospital

where it was determined she had suffered from a stroke, and she passed away

later that evening at around 11:30pm.

So all in all, she had a very good innings, and of all the ways to leave

this world, this was one of the quicker and more painless ways to do it.

That's the problem with a quick exit - it's usually completely unexpected.

It's looking like the funeral will be on Friday, and I've got a flight

tentatively booked to leave on Wednesday and get in on Friday morning and

return again on Monday. At the time I arranged this, I'd completely

forgotten that I was flying to Calgary on Monday night to babysit the son of

friends, while they go to the US Consulate for a visa appointment.

Fortunately this travel doesn't conflict with that travel, it just means

I'll be away from work for longer, and spending a lot of time inside

pressurized tubes. I'm fairly confident with all of the short international

trips, I'm going to look like a drug mule or something, and someone's going

to thoroughly cavity search me.

If you want to get all genealogically correct, she's technically my

step-maternal grandmother, but she's the grandmother I spent the most time

with, growing up, so I'm not going to split hairs.

It's come as a bit of a shock, because despite having had progressively worse

dementia for probably over a decade, she's been in incredibly good physical

condition. I think it was in her 70's that she had a heart valve replaced, and

I for one had been expecting that to be the thing to wear out and take her

down slowly. I last saw her in the flesh in January, when the above photo

was taken, and more recently a few weeks ago on Skype.

She was recently moved from an aged-care hostel in Toowoomba to a nursing

home in Brisbane, as her dementia had progressed beyond the point that she

could be cared for in the previous facility.

Mum had visited her earlier in the day yesterday, and she was fine, but

apparently the staff found her unconscious at around 5pm when they went to

get her for dinner. She was taken to the Royal Brisbane and Women's Hospital

where it was determined she had suffered from a stroke, and she passed away

later that evening at around 11:30pm.

So all in all, she had a very good innings, and of all the ways to leave

this world, this was one of the quicker and more painless ways to do it.

That's the problem with a quick exit - it's usually completely unexpected.

It's looking like the funeral will be on Friday, and I've got a flight

tentatively booked to leave on Wednesday and get in on Friday morning and

return again on Monday. At the time I arranged this, I'd completely

forgotten that I was flying to Calgary on Monday night to babysit the son of

friends, while they go to the US Consulate for a visa appointment.

Fortunately this travel doesn't conflict with that travel, it just means

I'll be away from work for longer, and spending a lot of time inside

pressurized tubes. I'm fairly confident with all of the short international

trips, I'm going to look like a drug mule or something, and someone's going

to thoroughly cavity search me.

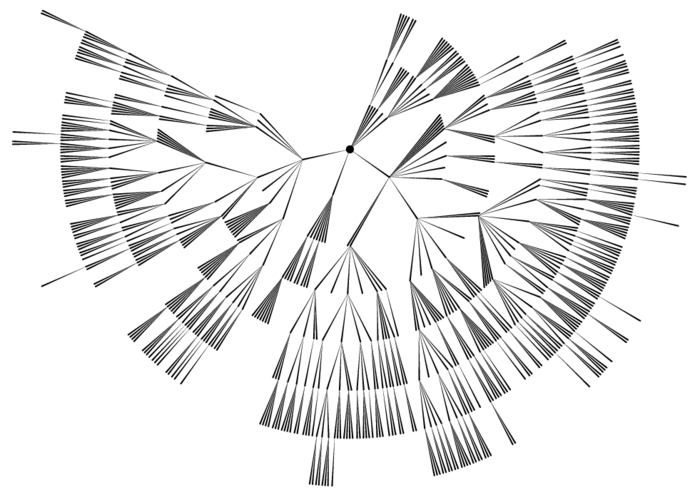

Over the course of the last five years, some relatives of me and I have been working on a family book which lists all descendants of my grandgrandgrandgrandfather Isaak Riehm, born 1799, and his wife Charlotte. My task was everything related to maintaining the data, running the infrastructure and generating the final book. In the end, we have collected biographical details, often with the person s live story, of 703 descendants, 285 partners and 416 parents-in-law. Together with an extensive preface the book now has a respectable size of 401 pages, includes 144 pictures and maps and over 200 copies have been printed and sold through the family.

Over the course of the last five years, some relatives of me and I have been working on a family book which lists all descendants of my grandgrandgrandgrandfather Isaak Riehm, born 1799, and his wife Charlotte. My task was everything related to maintaining the data, running the infrastructure and generating the final book. In the end, we have collected biographical details, often with the person s live story, of 703 descendants, 285 partners and 416 parents-in-law. Together with an extensive preface the book now has a respectable size of 401 pages, includes 144 pictures and maps and over 200 copies have been printed and sold through the family.

40319

An e-book reader where I can click on any word and enter a definition for it.

The definition will then appear as a tooltip when I hover over the word.

Take that, Iain Banks, and Neal Stephenson!

32

I want Deskview X's terminal program. The one that resizes the font when

the window is resized. Except free and modern.

With modern (ie, auto-layout tiling) window managers, this is less of a

wishlist and more of a missing necessity. Ideally, it would always keep the

window 80 columns (or some other user-defined value) wide.

I've poked around in gnome-terminal's source, and this looks fairly

doable, but I have not found the time to get down and do it.

40319

An e-book reader where I can click on any word and enter a definition for it.

The definition will then appear as a tooltip when I hover over the word.

Take that, Iain Banks, and Neal Stephenson!

32

I want Deskview X's terminal program. The one that resizes the font when

the window is resized. Except free and modern.

With modern (ie, auto-layout tiling) window managers, this is less of a

wishlist and more of a missing necessity. Ideally, it would always keep the

window 80 columns (or some other user-defined value) wide.

I've poked around in gnome-terminal's source, and this looks fairly

doable, but I have not found the time to get down and do it.

Due to their implementation by erasure, they face certain limitations.For example, the following constructor for a class with both compile time

and runtime type checking:

Due to their implementation by erasure, they face certain limitations.For example, the following constructor for a class with both compile time

and runtime type checking:

I've spent four hours listening to muzak yesterday.

Apparently, antitrust legislation prohibits T-Online from getting better access to T-Com's ticket system than their competitors. Of course that means "none at all".

I do however, disagree with their corollary that it is the customer's duty to call T-Com and tell them what is wrong.

On a Sunday evening. After a lightning strike. With a Windows update breaking PPPoE for ZoneAlarm users.

Seriously, get your processes straight.

I've spent four hours listening to muzak yesterday.

Apparently, antitrust legislation prohibits T-Online from getting better access to T-Com's ticket system than their competitors. Of course that means "none at all".

I do however, disagree with their corollary that it is the customer's duty to call T-Com and tell them what is wrong.

On a Sunday evening. After a lightning strike. With a Windows update breaking PPPoE for ZoneAlarm users.

Seriously, get your processes straight.